Tutorial: Corner Detection and Optical Flow

I. Introduction

In this tutorial, you will learn how to find corners and use them to track an object with OpenCV. Corners are detected with the Harris corner detection method, and the object is tracked with the Lucas-Kanade optical flow method (Kanade-Lucas-Tomasi tracking).

II. Tutorial

Download Test Image Files: Image data click here

Part 1. Corner Detection

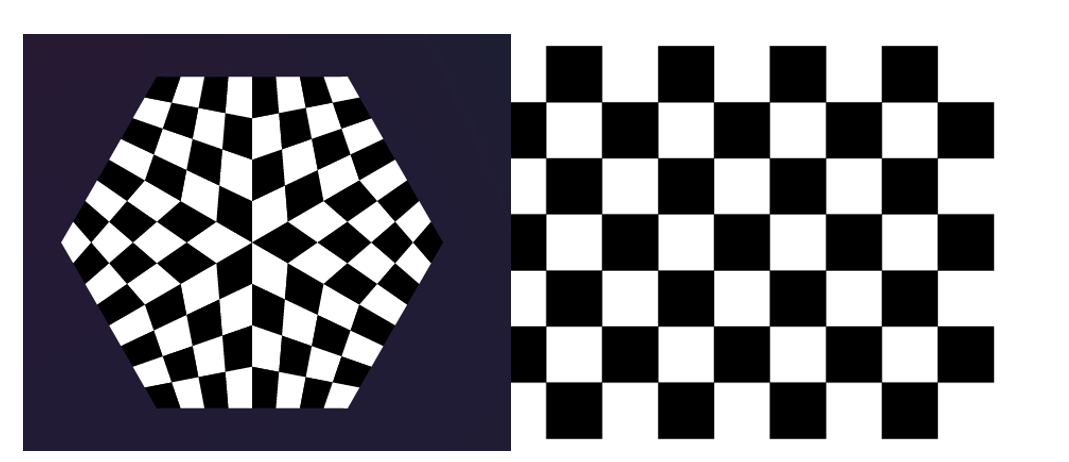

We will learn how to use Harris corner detection to find corners. Corner detection is an important step in camera calibration, which requires finding chessboard corners.

OpenCV function for Harris corner detection, cornerHarris(): read docs

Harris corner detector.

The function runs the Harris corner detector on the image. Similarly to cornerMinEigenVal and cornerEigenValsAndVecs, for each pixel (x,y) it calculates a 2×2 gradient covariance matrix M(x,y) over a blockSize×blockSize neighborhood. Then, it computes the following characteristic:

$$\mathrm{dst}(x,y) = \det M(x,y) - k \cdot \left(\mathrm{tr}\, M(x,y)\right)^2$$

Corners in the image can be found as the local maxima of this response map.
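To make the response formula concrete, here is a minimal sketch in plain Python. The helper name `harris_response` and the three sets of matrix entries are hypothetical values chosen for illustration; they are not taken from OpenCV or from the tutorial images. The matrix M is assumed to be symmetric, with entries sxx, sxy and syy.

```python
def harris_response(sxx, sxy, syy, k=0.04):
    """Return det(M) - k * tr(M)^2 for the symmetric matrix M = [[sxx, sxy], [sxy, syy]]."""
    det = sxx * syy - sxy * sxy
    trace = sxx + syy
    return det - k * trace * trace


# Hypothetical gradient covariance entries for three kinds of neighborhood.
flat = harris_response(0.0, 0.0, 0.0)        # no gradient in any direction
edge = harris_response(100.0, 0.0, 0.0)      # strong gradient along x only
corner = harris_response(100.0, 0.0, 100.0)  # strong gradient along both x and y

print("flat:", flat, "edge:", edge, "corner:", corner)
```

The response is zero for the flat patch, negative for the edge, and positive for the corner, which is why corners show up as local maxima of the response map.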

Parameters:

· src – Input single-channel 8-bit or floating-point image.

· dst – Image to store the Harris detector responses. It has the type CV_32FC1 and the same size as src.

· blockSize – Neighborhood size (see the details on [cornerEigenValsAndVecs()](<http://docs.opencv.org/2.4/modules/imgproc/doc/feature_detection.html?highlight=cornerharris#void cornerEigenValsAndVecs(InputArray src, OutputArray dst, int blockSize, int ksize, int borderType)>)).

· ksize – Aperture parameter for the [Sobel()](<http://docs.opencv.org/2.4/modules/imgproc/doc/filtering.html#void Sobel(InputArray src, OutputArray dst, int ddepth, int dx, int dy, int ksize, double scale, double delta, int borderType)>) operator.

· k – Harris detector free parameter. See the formula above.

· borderType – Pixel extrapolation method. See [borderInterpolate()](<http://docs.opencv.org/2.4/modules/imgproc/doc/filtering.html#int borderInterpolate(int p, int len, int borderType)>).

Tutorial Code:

Part 2. Optical Flow: Lucas-Kanade Optical Flow with Pyramids

OpenCV function for Lucas-Kanade optical flow, calcOpticalFlowPyrLK(): read docs

Calculates an optical flow for a sparse feature set using the iterative Lucas-Kanade method with pyramids.

Tutorial

Declare the variables used by the optical flow functions: the previous and current grayscale images, the point vectors, and the termination criteria.

Read the current video frame and convert it to a grayscale image.

Initialize the reference features to track. Initialization runs only once, unless the user calls the initialization again.

Run optical flow on the previous and current images and store the tracked points for the current image (gray, points[1]).

Draw the found features and lines on the image.

Swap the current image and points into the previous image and points, ready for the next frame.

Show the result image

Tutorial Code: see code here
