PointNet

PointNet: Deep Learning on Point Sets for 3D Classification and Segmentation: Charles R. Qi, Hao Su, Kaichun Mo, Leonidas J. Guibas

Introduction

The main difficulty with deep learning on point clouds is that typical convolutional architectures require a highly regular input format, such as images or temporal features. Because point clouds are not in a regular format, the common approach is to transform the data into a regular 3D voxel grid or a set of projections. This transformation, however:

renders the resulting data unnecessarily voluminous

introduces quantization artifacts that can obscure natural invariances of the data.

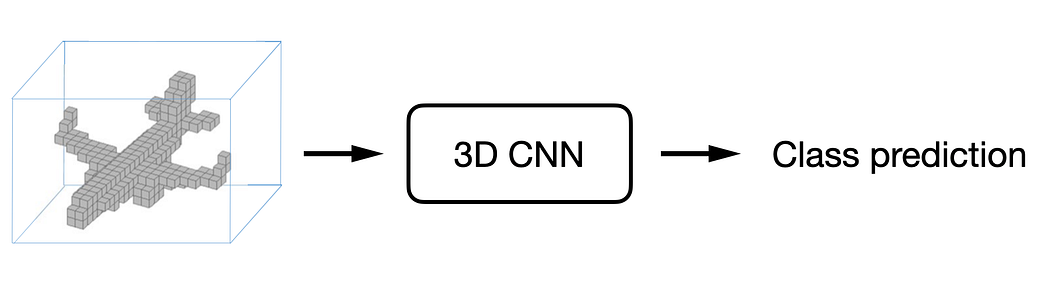

Example:

Idea: generalize 2D convolutions to regular 3D grids

image from: arxiv paper

The main problem is the inefficient representation: a cubic voxel grid with 100 cells per side has 100^3 = 1,000,000 voxels, regardless of how few of them actually contain points.
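To make the cost concrete, here is a minimal sketch that voxelizes a synthetic point cloud and counts how many cells are actually used. The point count (2048), the unit-cube bounds, and the helper name `occupied_voxels` are illustrative assumptions, not values from the paper.

```python
import random

def occupied_voxels(points, resolution):
    """Count the distinct voxels hit by points lying in the unit cube [0, 1)^3."""
    cells = set()
    for x, y, z in points:
        # Map each coordinate to an integer cell index in [0, resolution).
        cells.add((int(x * resolution), int(y * resolution), int(z * resolution)))
    return len(cells)

random.seed(0)
resolution = 100  # cells per side, as in the example above
# Assumed toy input: 2048 uniformly random points.
points = [(random.random(), random.random(), random.random()) for _ in range(2048)]

total = resolution ** 3
used = occupied_voxels(points, resolution)
print(f"total voxels:    {total}")
print(f"occupied voxels: {used} ({100 * used / total:.3f}% of the grid)")
```

At most 2048 of the one million cells can be occupied here, so a dense grid would store well over 99% empty space.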

Work with point clouds instead

PointNet introduced a new type of neural network that directly consumes unordered point clouds and respects the permutation invariance of the points in its input.

A novel deep net architecture that consumes raw point cloud (set of points) without voxelization or rendering.

A point cloud is just a set of points and is therefore invariant to permutations of its members, necessitating certain symmetrizations in the net computation. Further invariances to rigid motions also need to be considered.

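Permutation invariant: a model that produces the same output regardless of the order of the elements in its input.

e.g. a shared per-point MLP followed by a symmetric aggregation such as max or sum. (A plain MLP applied to the flattened list of points is not permutation invariant, because each input position has its own weights.)

CNNs and RNNs are NOT permutation invariant.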
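The definition can be checked with a small sketch: an element-wise max over the set gives the same result for any ordering, while simply concatenating the points (what a network on a flattened input would see) does not. The helper names `max_pool` and `flatten` are made up for this illustration.

```python
def max_pool(features):
    """Element-wise max over equal-length feature vectors (a symmetric function)."""
    return [max(column) for column in zip(*features)]

def flatten(features):
    """Concatenate vectors in input order (an order-dependent function)."""
    return [value for vector in features for value in vector]

points = [(0.0, 1.0, 2.0), (3.0, -1.0, 0.5), (1.5, 1.5, -2.0)]
reordered = points[::-1]  # same set of points, different feeding order

print(max_pool(points) == max_pool(reordered))  # True: order does not matter
print(flatten(points) == flatten(reordered))    # False: order changes the input
```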

Key to our approach is the use of a single symmetric function, max pooling. Effectively the network learns a set of optimization functions/criteria that select interesting or informative points of the point cloud and encode the reason for their selection.

Contribution

We design a novel deep net architecture suitable for consuming unordered point sets in 3D;

We show how such a net can be trained to perform 3D shape classification, shape part segmentation and scene semantic parsing tasks.

Network

For simplicity, it’ll be assumed that a point in a point cloud is fully described by its (x, y, z) coordinates. In practice, other features may be included, such as surface normal and intensity.

There are three main constraints:

Point clouds are unordered. The algorithm has to be invariant to permutations of the input set.

In other words, a network that consumes a set of N 3D points needs to be invariant to the N! permutations of the input set in data feeding order.

The network must be invariant to rigid transformations.

For example, rotating and translating all points together should not modify the global point cloud category.

The network should capture interactions among points.

This means that points are not isolated, and neighboring points form a meaningful subset.
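As a sketch of the second constraint, the snippet below applies a rigid motion (a rotation about the z-axis followed by a translation) to a tiny point cloud. The raw coordinates change, but the pairwise distances, and hence the shape, do not. The cloud, the angle, and the offset are arbitrary values chosen for illustration.

```python
import math

def rotate_z(point, angle):
    """Rotate a 3D point about the z-axis by `angle` radians."""
    x, y, z = point
    c, s = math.cos(angle), math.sin(angle)
    return (c * x - s * y, s * x + c * y, z)

def translate(point, offset):
    """Shift a 3D point by `offset`."""
    return tuple(p + o for p, o in zip(point, offset))

def pairwise_distances(points):
    """Distances between all unordered pairs, in a fixed pair order."""
    return [math.dist(a, b) for i, a in enumerate(points) for b in points[i + 1:]]

cloud = [(1.0, 0.0, 0.0), (0.0, 2.0, 0.0), (0.0, 0.0, 3.0), (1.0, 1.0, 1.0)]
moved = [translate(rotate_z(p, math.pi / 3), (5.0, -2.0, 0.5)) for p in cloud]

same_shape = all(
    math.isclose(d0, d1, abs_tol=1e-9)
    for d0, d1 in zip(pairwise_distances(cloud), pairwise_distances(moved))
)
print(cloud != moved)  # coordinates differ after the rigid motion
print(same_shape)      # but all pairwise distances are preserved
```

A network that looks only at raw coordinates sees two different inputs here, which is why the alignment step matters.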

Classification network

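Outputs classification scores for k classes.

Max pooling reduces the per-point features from n x 1024 to a single 1024-dimensional vector.

n x 1024: one feature vector per point (local) --> 1 x 1024: global feature

Unordered input: use a symmetric function.

Addition (+) and multiplication (*) are examples of symmetric functions.

PointNet uses max pooling as the symmetric function.

A shared MLP (h) followed by max pooling (g) approximates a function on the set: f({x_1, ..., x_n}) ≈ g(h(x_1), ..., h(x_n)). Each output channel is a single value in R; with 1024 channels this gives the 1 x 1024 global feature.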
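The shared MLP followed by max pooling can be sketched in plain Python as follows. This is an untrained toy with random weights and small layer sizes (3 -> 16 -> 8 instead of the 1024-dimensional feature mentioned above). The function names are hypothetical, and it only illustrates why the resulting global feature ignores point order.

```python
import random

def make_layer(n_in, n_out, rng):
    """Random weights and biases for one fully connected layer (untrained)."""
    weights = [[rng.uniform(-1, 1) for _ in range(n_in)] for _ in range(n_out)]
    biases = [rng.uniform(-1, 1) for _ in range(n_out)]
    return weights, biases

def shared_mlp(point, layers):
    """h: apply the same layers (each followed by ReLU) to one point."""
    x = list(point)
    for weights, biases in layers:
        x = [max(0.0, sum(w * v for w, v in zip(row, x)) + b)
             for row, b in zip(weights, biases)]
    return x

def global_feature(points, layers):
    """g(h(x_1), ..., h(x_n)) with g = element-wise max over the points."""
    per_point = [shared_mlp(p, layers) for p in points]  # n x K local features
    return [max(column) for column in zip(*per_point)]   # 1 x K global feature

rng = random.Random(0)
layers = [make_layer(3, 16, rng), make_layer(16, 8, rng)]  # toy sizes (assumption)
points = [tuple(rng.uniform(-1, 1) for _ in range(3)) for _ in range(32)]

feature = global_feature(points, layers)
feature_reversed = global_feature(points[::-1], layers)
print(len(feature))                 # K = 8 in this toy setup
print(feature == feature_reversed)  # True: feeding order does not matter
```

Because h is applied to every point with the same weights and max is symmetric, reordering the points only reorders the rows of the n x K matrix, which the column-wise max ignores.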

More notes on the symmetry function for unordered input

The biggest issue when applying deep learning to point clouds is that standard network layers assume an ordered input, whereas a point cloud is an unordered set.

Local and Global Information Aggregation

The segmentation network combines local and global knowledge, so that per-point predictions can rely on both local geometry and global semantics.

In the segmentation network, the global feature (1 x 1024) is concatenated to each of the n per-point features (n x 64), giving an n x (64 + 1024) = n x 1088 matrix.
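The concatenation step amounts to the following shape bookkeeping. The feature values are placeholders and `concat_local_global` is a hypothetical helper name. Only the dimensions (64 local, 1024 global) come from the notes above.

```python
def concat_local_global(local_features, global_vec):
    """Append the same global vector to every per-point local feature."""
    return [list(local) + list(global_vec) for local in local_features]

n, local_dim, global_dim = 5, 64, 1024
local_features = [[float(i)] * local_dim for i in range(n)]  # n x 64 (placeholder values)
global_vec = [1.0] * global_dim                              # 1 x 1024 (placeholder values)

combined = concat_local_global(local_features, global_vec)
print(len(combined), len(combined[0]))  # 5 1088
```

Every point keeps its own 64 local values while sharing the same 1024 global values, so the per-point classifier can use both.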

Joint alignment network

Under a rigid transformation, the semantic labeling of a point cloud should be invariant.

Option 1) Align every input set to a canonical space before feature extraction.

This paper uses a T-net for this purpose.

It is a mini-network that predicts an affine transformation matrix, which is then applied directly to the coordinates of the input points.

It resembles the main network: (1) point-independent feature extraction, (2) max pooling, (3) fully connected layers.
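Applying the predicted matrix to the input is a plain matrix product over the coordinates. The sketch below assumes a 3 x 3 matrix and the row-vector convention p' = p · M. The `predicted` matrix is a hand-written stand-in for a T-net output (here a 90-degree rotation about z), not something produced by a trained network.

```python
def apply_transform(points, matrix):
    """Multiply each point (as a row vector) by a 3x3 matrix: p' = p @ matrix."""
    return [
        tuple(sum(p[k] * matrix[k][j] for k in range(3)) for j in range(3))
        for p in points
    ]

identity = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
# Hypothetical T-net output: a 90-degree rotation about the z-axis.
predicted = [[0.0, 1.0, 0.0], [-1.0, 0.0, 0.0], [0.0, 0.0, 1.0]]

points = [(1.0, 0.0, 0.0), (0.0, 2.0, 0.0), (0.0, 0.0, 3.0)]
aligned = apply_transform(points, predicted)
print(aligned)
print(apply_transform(points, identity) == points)  # identity leaves the cloud unchanged
```

The rest of the network then operates on `aligned` instead of the raw coordinates.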

Implementation

The classification model from the original paper has been implemented in PyTorch as a Google Colab notebook.

You can find the full notebook at: https://github.com/nikitakaraevv/pointnet/blob/master/nbs/PointNetClass.ipynb

